Queuing

QBO installations include a robust queuing infrastructure that wraps and extends existing industry-standard queuing technologies such as SQL Service Broker, AWS Simple Queue Service, Azure’s Storage Queues, or RabbitMQ.

When we designed QBO, using some sort of message queuing to enable a scale-out architecture was an obvious requirement. We had no desire to invent our own messaging infrastructure – there are plenty of proven products to leverage. Today, we use several of them simultaneously, depending on the use cases.

All of the message queuing platforms we explored lacked a handful of features we required:

- Make it easy for power users to queue messages

- An ability to future-queue items (e.g. run this report at 6am)

- A built-in consumer service (e.g. no need to write code to process items in a queue)

- Include scheduling recurring jobs in the infrastructure (e.g. run a report every day at 6am)

- Enable power users to manage error handling (more than move to an error queue)

- Offer throttling for third party interfaces (comply with terms of service)

- Enable prioritization of queues (critical messages processed ahead of regular messages)

Ease of Use

Any QBO operation can be queued, including operations yet to be defined by future clients and power users. A QBO “operation” is a database call, C# method, or plugin, such as:

- Adding a task

- Generating a document

- Emailing a message

- Invoking a third party web service

- Launching workflows

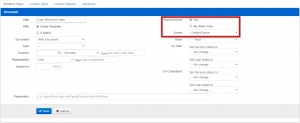

There are several places in the QBO3 UI that allow a power user to configure operations to be called, including whether to queue them and what queue to route the operation to. For example, when designing workflows, the power user is prompted to pick a queue from a drop down menu. Whenever the workflow executes the step, it will simply be queued into the appropriate queue for execution.

Technically inclined power users can also queue an operation from a browser’s address bar. For example:

    // Generate a welcome letter
    Attachment/Attachment.ashx/Queue/Generate?Template=Welcome Letter&...

    // Generate a welcome letter using the Letter Generation queue
    Attachment/Attachment.ashx/Queue/Generate?Template=Welcome Letter&QueueName=Letter Generation...
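
The URL pattern in these examples can also be composed programmatically. The following is a minimal sketch, not part of QBO: `queue_url` is a hypothetical helper, and only the path layout and the `Template` and `QueueName` parameters come from the examples above. Unlike text typed into an address bar, the helper percent-encodes spaces.

```python
from urllib.parse import urlencode, quote


def queue_url(module, handler, operation, queue_name=None, **params):
    """Build a relative URL that queues `operation` instead of running it inline.

    Hypothetical helper: the layout mirrors the example URLs above,
    e.g. Attachment/Attachment.ashx/Queue/Generate?Template=...
    """
    query = dict(params)
    if queue_name is not None:
        # Route the operation to a specific named queue.
        query["QueueName"] = queue_name
    path = f"{module}/{handler}.ashx/Queue/{operation}"
    # quote_via=quote encodes spaces as %20 rather than '+'.
    return f"{path}?{urlencode(query, quote_via=quote)}"


default_queue = queue_url("Attachment", "Attachment", "Generate",
                          Template="Welcome Letter")
named_queue = queue_url("Attachment", "Attachment", "Generate",
                        queue_name="Letter Generation",
                        Template="Welcome Letter")
print(default_queue)
print(named_queue)
```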

Consumer: QBO Queue Service

Message queuing is useless without a consumer to pop messages and do something with them. QBO’s Queue Service is a Windows service that runs on an application server, and can be configured to monitor queues. The queue service pops messages from the queues, executing the operation by passing it to the QBO framework. For example:

    // This generates an attachment in QBO by calling a web server
    Attachment/Attachment.ashx/Generate?Template=Welcome Letter&...

    // This queues a message to generate an attachment
    Attachment/Attachment.ashx/Queue/Generate?Template=Welcome Letter&...

The web server will write an Attachment/Generate message to a queue, and shortly thereafter (typically a few milliseconds), the Queue Service will pop this message, and call QBO’s Attachment/Generate method.

The Queue Service can pop and process any QBO operation, including any database manipulation, application function (like generating documents), and calls to third party web services and APIs. Once an operation is configured in QBO, the queuing infrastructure can be used to scale out its execution, running it in parallel across many application servers.

Queues can be configured to be ignored by the Queue Service, so messages pushed to the queue may be popped by a third party consumer. This allows QBO to offer a publisher-subscriber model, ideal for exchanging data between QBO and third party systems in a robust, asynchronous manner.
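
To make the consumer's role concrete, here is a minimal in-memory sketch of the pop-and-dispatch behavior described in this section. It is an illustration only, not the Queue Service's actual implementation: the `OPERATIONS` registry, the `QueueService` class, and the queue names are all assumptions made for the example.

```python
from collections import deque

# Hypothetical registry mapping operation names to handlers.
# In QBO the framework resolves these; here they are plain functions.
OPERATIONS = {
    "Attachment/Generate": lambda params: f"generated {params['Template']}",
}


class QueueService:
    """Pops messages from monitored queues and dispatches each operation."""

    def __init__(self, queues, ignored=()):
        self.queues = queues         # queue name -> deque of (operation, params)
        self.ignored = set(ignored)  # queues left for third party consumers

    def run_once(self):
        results = []
        for name, messages in self.queues.items():
            if name in self.ignored:
                continue  # not ours to pop; a subscriber elsewhere will
            while messages:
                operation, params = messages.popleft()
                results.append(OPERATIONS[operation](params))
        return results


queues = {
    "Default": deque([("Attachment/Generate", {"Template": "Welcome Letter"})]),
    "Outbound": deque([("Partner/Notify", {})]),
}
service = QueueService(queues, ignored={"Outbound"})
results = service.run_once()
```

After `run_once`, the `Default` queue is drained while the message in the ignored `Outbound` queue is still waiting for its third party consumer.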

Future Queuing

A limitation we encountered with ‘off the shelf’ message queuing was a lack of future queuing. It’s often useful to queue operations for execution 15 minutes from now, on the first of the month, daily at 6 am, and so on. When QBO queues an operation, if there is a QueueDate specified by the operation, QBO will push the operation to a dedicated “future queue”. The future queue is a special queue, managed by the Queue Service. When the queue date passes, the Queue Service will pop the message and push it to a “normal” queue (Azure, SQS, Service Broker) for processing.

The ability to future queue operations is a powerful layer on top of any of the ‘off the shelf’ message queuing configured to run with QBO.
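
The future queue behavior described above can be sketched with a priority heap ordered by queue date. This is a simplified, in-memory illustration under our own assumptions: the `FutureQueue` class and `promote_due` method are hypothetical names, and a plain `deque` stands in for the “normal” queue.

```python
import heapq
from collections import deque
from datetime import datetime, timedelta


class FutureQueue:
    """Holds messages until their queue date passes."""

    def __init__(self):
        self._heap = []  # entries are (queue_date, sequence, message)
        self._seq = 0    # tie-breaker so messages themselves are never compared

    def push(self, queue_date, message):
        heapq.heappush(self._heap, (queue_date, self._seq, message))
        self._seq += 1

    def promote_due(self, now, normal_queue):
        """Move every message whose queue date has passed to the normal queue."""
        moved = 0
        while self._heap and self._heap[0][0] <= now:
            _, _, message = heapq.heappop(self._heap)
            normal_queue.append(message)
            moved += 1
        return moved


now = datetime(2024, 1, 1, 5, 45)
future, normal = FutureQueue(), deque()
future.push(now + timedelta(minutes=15), "Report/Run")
future.push(now + timedelta(days=1), "Attachment/Generate")

moved_early = future.promote_due(now, normal)                         # nothing due yet
moved_later = future.promote_due(now + timedelta(minutes=15), normal)  # one message due
```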

Scheduling Recurring Jobs

The QBO queuing infrastructure enables scheduling an operation for recurring execution:

    // Schedule the running of a report every day at 6 am
    Report/ReportRequest.ashx/Schedule/Run?Report=Daily Dashboard&Schedule=Daily at 6am&ToAddress=jdoe@acme.com

This will queue an operation to run the next day at 6 am, and include a schedule in the message. When QBO pops a message to execute an operation, if there is a schedule associated with the popped message, QBO will re-queue the operation to execute again at the next run date determined by the schedule. Thus, the ReportRequest/Run operation will:

1. Push ReportRequest/Run to the future queue to run at 6 am the next day,

2. Queue Service will pop the message, and run the report, and

3. Loop back to step 1.

Schedules can be complex, including the definition of business days, hours of operation, and holidays.

A single job can be associated with a schedule, as well as an entire queue.
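
The re-queue loop for a recurring job can be sketched as follows. This is a deliberately simple illustration: it supports only a “daily at a fixed time” schedule, ignores business days and holidays, and uses hypothetical names (`next_daily_run`, `requeue_if_scheduled`) and a hypothetical message shape that are not part of QBO.

```python
from datetime import datetime, time, timedelta


def next_daily_run(after, at=time(6, 0)):
    """Return the first occurrence of the time `at` strictly after `after`."""
    candidate = datetime.combine(after.date(), at)
    if candidate <= after:
        candidate += timedelta(days=1)
    return candidate


def requeue_if_scheduled(message, now, future_queue):
    """After a message is popped and run, push it back if it has a schedule."""
    schedule = message.get("schedule")
    if schedule is not None:
        future_queue.append((next_daily_run(now, schedule), message))


# A message popped shortly after 6 am is re-queued for 6 am the next day.
future_queue = []
message = {"operation": "ReportRequest/Run", "schedule": time(6, 0)}
requeue_if_scheduled(message, datetime(2024, 3, 4, 6, 0, 2), future_queue)
next_run = future_queue[0][0]
```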

Error Handling

QBO is typically used to orchestrate communications between a variety of third party systems, and any system is likely to have hiccups once in a while. FTP sites get busy and refuse connections, VPN tunnels go down for a few seconds, and web APIs occasionally are taken offline for maintenance. QBO’s Queuing infrastructure is designed to handle these types of errors without requiring human intervention.

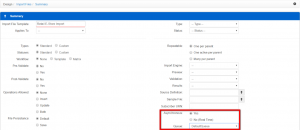

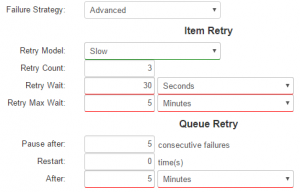

Error handling options for a queue.

In the real world, Quandis has hit a few recurring scenarios that the Queuing module can handle quite elegantly:

- Hiccups: a random error should simply be retried again in a few seconds (e.g. FTP connections refused)

- Third party API performance degraded: consecutive errors imply that QBO may be ‘pushing’ a third party API too hard, so the queuing should automatically slow down

- Cost sensitive operations: each operation incurs some sort of third-party fee, so error handling should err on the side of caution and pause a queue for human intervention earlier than other queues

When a given queue encounters enough consecutive errors, the queue will pause itself, and notify power users (or Quandis engineers). Queues may be configured to automatically restart themselves after a period of time, which Quandis has found very useful for third party APIs that occasionally go offline for unplanned maintenance.

All of these options have been pre-configured and are available from a simple drop down list. Advanced power users are welcome to control each of the data points considered by the Queuing error strategy, tailoring it to their nuanced requirements.
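
The pause-and-restart behavior can be illustrated with a small state machine. This is a sketch under stated assumptions, not the actual Queuing error strategy: `QueueErrorPolicy` and its parameters are hypothetical, times are plain seconds, and notification of power users is reduced to a comment.

```python
class QueueErrorPolicy:
    """Pauses a queue after too many consecutive errors, with optional auto-restart."""

    def __init__(self, max_consecutive_errors, restart_after_seconds=None):
        self.max_consecutive_errors = max_consecutive_errors
        self.restart_after_seconds = restart_after_seconds
        self.consecutive_errors = 0
        self.paused_at = None

    def record_success(self):
        # Any success breaks the streak, so isolated hiccups never pause the queue.
        self.consecutive_errors = 0

    def record_error(self, now):
        self.consecutive_errors += 1
        if self.consecutive_errors >= self.max_consecutive_errors:
            self.paused_at = now  # a real system would also notify power users here

    def is_paused(self, now):
        if self.paused_at is None:
            return False
        if (self.restart_after_seconds is not None
                and now - self.paused_at >= self.restart_after_seconds):
            # Auto-restart: clear the pause and start counting afresh.
            self.paused_at = None
            self.consecutive_errors = 0
            return False
        return True


# A cost-sensitive queue might use a low threshold and no auto-restart;
# a queue for a flaky third party API might restart itself after 10 minutes.
policy = QueueErrorPolicy(max_consecutive_errors=3, restart_after_seconds=600)
```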

Throttling

Once every year or so, Quandis is asked to integrate with a third party system that either has terms of service that limit the rate at which it can be called, or that is limited in its ability to handle concurrency. In these cases, a queue can be configured to limit the number of operations allowed per second (or ms, minute, hour, day, month, year, etc.). This allows QBO to queue as many operations as quickly as users want, using a queue to ‘buffer’ the requests to the third party. For example, some websites can only handle a few dozen users at once, so a screen scraping queue may be configured to throttle screen scrape operations to 1 every 3 seconds.
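
A throttle of this kind can be sketched as a minimum interval between operations. The `Throttle` class below is a hypothetical illustration rather than QBO's implementation; it takes the current time as a number of seconds so the behavior is easy to follow.

```python
class Throttle:
    """Allows at most `max_operations` per `per_seconds`, evenly spaced."""

    def __init__(self, max_operations, per_seconds):
        self.min_interval = per_seconds / max_operations
        self.next_allowed = 0.0

    def try_acquire(self, now):
        """Return True if an operation may run at time `now` (in seconds)."""
        if now < self.next_allowed:
            return False  # too soon; the message stays in the queue
        self.next_allowed = now + self.min_interval
        return True


# The screen scraping example: 1 operation every 3 seconds.
scrape_throttle = Throttle(max_operations=1, per_seconds=3)
attempts = [scrape_throttle.try_acquire(t) for t in (0.0, 1.0, 2.9, 3.0)]
```

Here only the attempts at 0.0 and 3.0 seconds are allowed; the messages behind the rejected attempts simply wait in the queue, which is the ‘buffer’ described above.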

Prioritization

Queuing typically operates on a First-In-First-Out (FIFO) basis. This works well for most use cases (and in fact is required for many use cases). Occasionally, however, FIFO can be problematic. Queuing implements the concept of prioritization by enabling queues to be dependent on other queues. The Queue Service will not pop messages from the queue unless all queues that it is dependent upon are empty.

For example, assume there is a third-party payment processing system with limited bandwidth, and we’re calling a Payment/Post operation:

- At 6am, a job queues up 100,000 payments to be posted to the payment processing system

- Let’s assume each payment takes 1 second to post, and the system can handle 5 concurrent connections

- It will take about 5.5 hours to process all 100,000 payments

- At 8:30 am, a customer calls in to make an ACH payment over the phone

  - we want this payment to “jump” ahead of the 100,000 queued at 6am – this is high priority!
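
The arithmetic behind this scenario is worth spelling out. The sketch below assumes the job starts at exactly 6 am and runs at full throughput the whole time; the variable names are ours.

```python
payments = 100_000
seconds_per_payment = 1
concurrency = 5

# Total time to drain the queue: 100,000 payments at 5 per second.
total_seconds = payments * seconds_per_payment // concurrency   # 20,000 seconds
total_hours = total_seconds / 3600                               # about 5.56 hours

# By 8:30 am, 2.5 hours (9,000 seconds) have elapsed.
elapsed_seconds = int(2.5 * 3600)
processed = elapsed_seconds * concurrency // seconds_per_payment  # 45,000 payments
remaining = payments - processed                                  # 55,000 payments

# Under strict FIFO, the phone payment waits behind everything still queued.
fifo_wait_hours = remaining * seconds_per_payment / concurrency / 3600

print(f"total: {total_hours:.2f} h, FIFO wait at 8:30 am: {fifo_wait_hours:.2f} h")
```

Under these assumptions, a payment queued at 8:30 am would wait roughly three hours behind the remaining 55,000 payments if the queue were strictly FIFO.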

The Queuing infrastructure can handle this use case as follows:

- Define a queue called ‘Payments – High Priority’

  - high priority operations such as over-the-phone ACH payments will be queued to this queue

- Define a queue called “Payments”, and set its Dependencies to ‘Payments – High Priority’

  - regular priority operations, such as our 100,000 payment job, will be queued to this queue

  - before popping items from the Payments queue, the Payments – High Priority queue must be empty

  - at 8:30 am, this payment is queued to Payments – High Priority, and the Payments queue ‘waits’ for it to be processed
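
The dependency rule can be sketched in a few lines. This is an in-memory illustration with hypothetical names (`DependentQueues`, `define`, `pop`), not the Queue Service's code; only the two queue names and the “dependencies must be empty” rule come from the text above.

```python
from collections import deque

HIGH = "Payments – High Priority"
NORMAL = "Payments"


class DependentQueues:
    """Queues that may only be popped when the queues they depend on are empty."""

    def __init__(self):
        self.queues = {}        # queue name -> deque of messages
        self.dependencies = {}  # queue name -> names that must be empty first

    def define(self, name, depends_on=()):
        self.queues[name] = deque()
        self.dependencies[name] = list(depends_on)

    def push(self, name, message):
        self.queues[name].append(message)

    def pop(self, name):
        """Pop the next message, or return None if blocked or empty."""
        if any(self.queues[dep] for dep in self.dependencies[name]):
            return None  # a higher priority queue still has work
        queue = self.queues[name]
        return queue.popleft() if queue else None


q = DependentQueues()
q.define(HIGH)
q.define(NORMAL, depends_on=[HIGH])

q.push(NORMAL, "payment 1")
q.push(NORMAL, "payment 2")
first = q.pop(NORMAL)            # FIFO within the Payments queue
q.push(HIGH, "ACH phone payment")
blocked = q.pop(NORMAL)          # Payments waits while High Priority is non-empty
urgent = q.pop(HIGH)
resumed = q.pop(NORMAL)          # Payments resumes once High Priority is empty
```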

Auto Scaling

Cloud auto-scaling can be leveraged by Queuing. Any queue can be configured to trigger adding application servers to process items in a queue when the queue is backed up. For example, the Quandis Court Connect platform OCRs documents found on court cases to make them searchable. OCR can be a slow process, but our demand is usually low, so our OCR queues are normally handled by 2 application servers. However, if we get a surge of messages in the OCR queue, the following happens:

- Queuing logs the number of items in the OCR queue to the cloud (AWS CloudWatch in our case)

- A large enough number in the logs triggers auto-scaling, starting extra servers to help pop messages from the OCR queue

- Eventually, Queuing logs that the number of items in the OCR queue has been reduced, and auto-scaling stops the extra servers

In real world conditions, we’ve seen our OCR queue trigger adding 100 additional servers to OCR nearly a million documents in about two hours, then revert to the typical 2 servers monitoring our typical load.
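
The scaling decision itself is made by the cloud provider's auto-scaling rules, not by QBO, but its effect can be approximated with a simple function of queue depth. The sketch below is purely illustrative: `desired_servers` and the `messages_per_server` figure are assumptions, while the baseline of 2 servers and the ceiling of 100 extra servers echo the OCR example above.

```python
import math


def desired_servers(queue_depth, messages_per_server,
                    min_servers=2, max_servers=102):
    """Estimate how many servers a backlog of `queue_depth` messages calls for."""
    needed = math.ceil(queue_depth / messages_per_server)
    # Never drop below the normal baseline or exceed the configured ceiling.
    return max(min_servers, min(max_servers, needed))


quiet = desired_servers(0, messages_per_server=10_000)          # baseline only
surge = desired_servers(1_000_000, messages_per_server=10_000)  # large backlog
```

As the queue drains, the same calculation yields a smaller number, which corresponds to auto-scaling stopping the extra servers.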

Auto-scaling allows you to guarantee a certain throughput for specific operations, regardless of load. It also minimizes cost, allowing you to plan for “normal” loads yet knowing you can still meet Service Level Agreements should a surge in demand strike.

If a power user can configure an operation in QBO, it can easily be queued and auto-scaled to meet virtually unlimited demand.